Manufacturing Analytics

Industrial Big Data Solution for Semiconductor

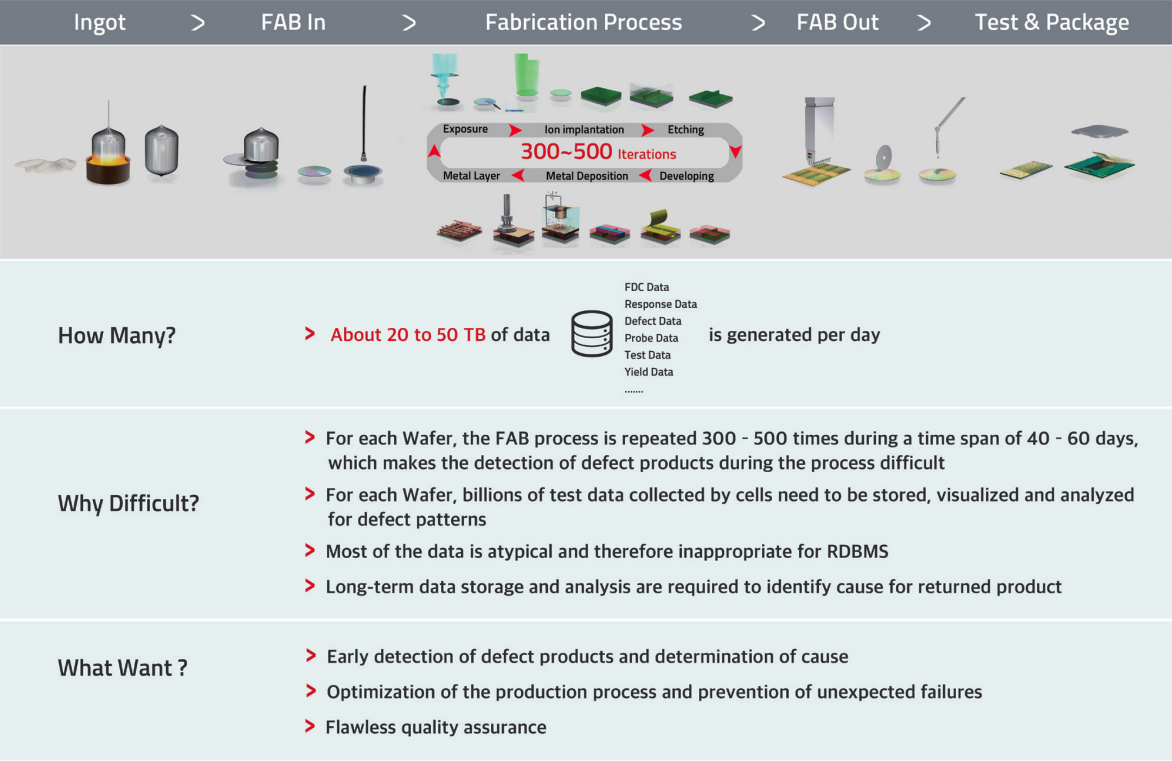

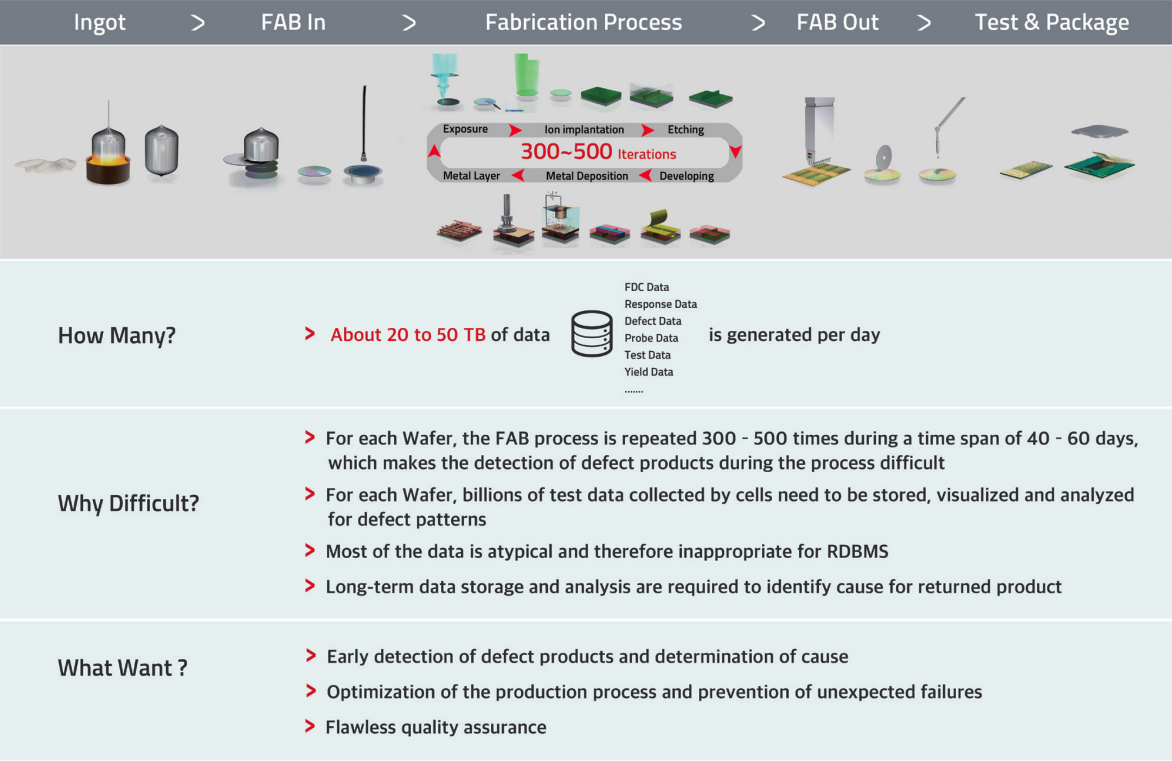

Silicon wafers are the base material for manufacturing semiconductor integrated circuits. Once a wafer has been fabricated, it is cut into chips, which become finished products after chip testing and package testing. The semiconductor production process is lengthy, lasting 40 to 60 days, so it is far more efficient to detect defective wafers during production by analyzing process data than to rely on total inspection. Production facilities are modularized and advanced, and with thousands of machines installed and operating on the production line, inspection becomes all the more complex. Because the process involves a wide range of processing, measurement and test equipment, the data it generates is varied and often unstructured.

Challenges

Company A, an international semiconductor manufacturer, was experiencing explosive data growth as the number of FABs and their output increased. However, the limited capacity of the legacy system architecture made it difficult to add systems and storage. Even if expansion had been possible, not all of the desired data could have been acquired, and storage could not keep pace with the rapidly growing data. In addition, a fine-grained data measurement cycle was necessary for accurate and detailed yield management, fault detection and process control.

For this reason, it was no longer feasible to ingest the large volume of unstructured data generated during a complex process with a traditional RDBMS architecture. Moreover, the slow single-node analytical system led to dissatisfied users and inaccurate analysis results.

Case Study

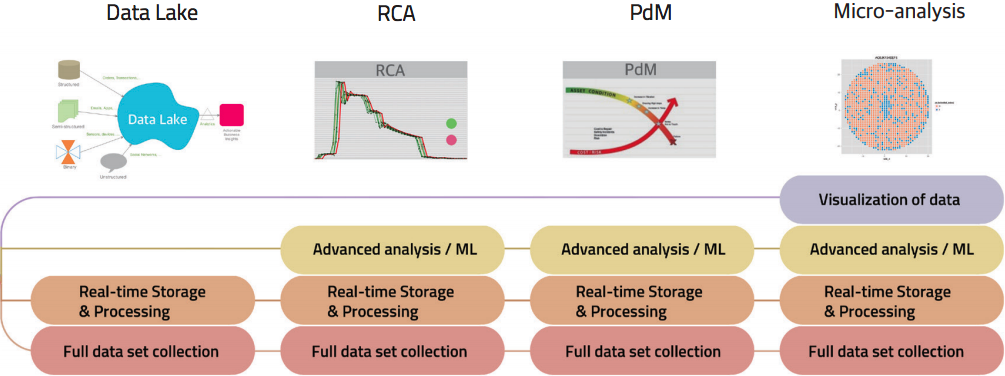

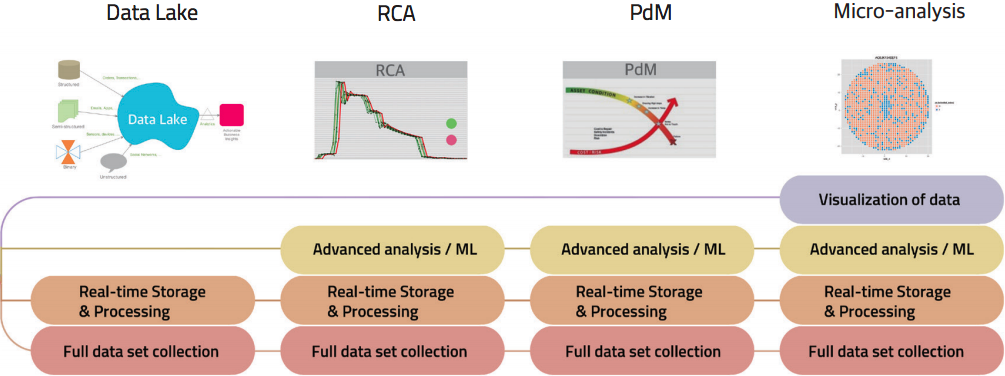

Company A’s FDC (Fault Detection & Classification), Probe, Test and Yield systems have been integrated with Metatron, and the RCA (Root Cause Analytics) System, Micro-quality Analysis System and Predictive Maintenance System are currently being implemented or enhanced.

Data Lake Infra

The volume of data at Company A is growing rapidly, but the legacy systems offered neither cost efficiency nor immediate scale-out. Data could not be stored or searched quickly, so analysis took too long.

Because the data sets were very large, some reaching tens of TBs, they were transformed into compressed binary data before being saved in a DB to avoid excessive infrastructure storage costs. Each analytical system then incurred delays retrieving the compressed data and restoring it to raw form. Because data was stored in separate silos by data type and by FAB, it was duplicated, and it was impossible to join or compare individual data sets or data between FABs.

Integrating the distributed data in a single place and saving it in uncompressed raw form delivers data access at low cost and high performance. It also makes it possible to store the full data set, retain it longer, and analyze diverse data in more detail. In the past, legacy systems such as APC (Automatic Process Control), Virtual Metrology and the Yield Management System had to decompress the data before analyzing it. These legacy systems can now query the data directly through the Metatron interface, which improves infrastructure efficiency by reducing the time needed for data analysis and removing the need for space to hold summary data for each system.

Root Cause Analysis System

The RCA (Root Cause Analytics) system performs linkage analysis on source, yield and production data acquired from equipment, and provides information for identifying which parameter is the root cause of low yield in a piece of equipment or a process.

Company A already had an RCA system, but it could analyze only some of the operation and recipe parameters. Owing to limitations in technology, cost and performance, it was also restricted to pre-built analyses. As a result, site managers could use only limited data and static analytical functions. Moreover, the system was seldom used in actual operations because it was slow.

Metatron was designed to run algorithms commonly used in the manufacturing domain with parallel/distributed processing, offering processing speeds several times or even tens of times faster than legacy systems. Whereas previously you could analyze only certain periods and parameters, you can now select the whole period or all parameters. The distributed processing layer supports PCA (Principal Component Analysis) / Hotelling’s T-squared / DTW (Dynamic Time Warping) & K-medoids / Wavelet / Random Forest / Lasso / DBSCAN / KNN (K-Nearest Neighbors) / PLS (Partial Least Squares), and this set has been continuously expanded to accommodate customer demands. In terms of user interface, site users are provided with intuitive analytical systems and various analytical chart components to create a ‘data self-discovery’ environment.
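To make one of the listed algorithms concrete, the following is a minimal single-node sketch of DTW (Dynamic Time Warping) in plain Python. It is not Metatron's implementation, which is described only as parallel/distributed; the function name `dtw_distance` and the sensor traces are hypothetical and serve only to show why DTW suits equipment traces that share a shape but are shifted in time.

```python
def dtw_distance(a, b):
    """Classic dynamic time warping distance with absolute-difference cost.

    Runs in O(len(a) * len(b)) time; illustrative only, not a distributed version.
    """
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] = minimal cumulative cost of aligning a[:i] with b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            step = abs(a[i - 1] - b[j - 1])
            cost[i][j] = step + min(
                cost[i - 1][j],      # repeat b[j-1] against a new point of a
                cost[i][j - 1],      # repeat a[i-1] against a new point of b
                cost[i - 1][j - 1],  # advance both series
            )
    return cost[n][m]


# Hypothetical sensor traces (arbitrary units)
reference = [0.0, 1.0, 2.0, 3.0, 2.0, 1.0, 0.0]
delayed = [0.0, 0.0, 1.0, 2.0, 3.0, 2.0, 1.0, 0.0]  # same shape, starts one step late
spiked = [0.0, 1.0, 2.0, 5.0, 2.0, 1.0, 0.0]        # abnormal peak value

print(dtw_distance(reference, delayed))  # 0.0: the time shift is absorbed
print(dtw_distance(reference, spiked))   # 2.0: the abnormal peak remains visible
```

A point-by-point comparison would penalize the delayed trace even though the equipment behaved normally; DTW scores it as identical while still flagging the spiked trace.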

Micro-Quality Analysis and Package Data Analysis System

During production, wafers move through the manufacturing process in sets of 25, commonly referred to as a ‘LOT’. Once the front-end process of wafer manufacturing is complete, a number of tests are conducted, and the resulting data is reviewed in an analytical system. By overlaying the data for several wafers, you can easily find the sectors with a statistically higher defect rate.
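The overlay idea can be sketched in a few lines of Python. The example below assumes each wafer's test result is a small rectangular grid of pass/fail flags (1 = fail) with the same die layout on every wafer; real wafer maps are circular and far larger, and the names `stack_fail_rate` and `hot_spots`, the 0.5 threshold, and the data are all hypothetical.

```python
def stack_fail_rate(wafer_maps):
    """Element-wise fail rate across wafers that share the same die layout."""
    n = len(wafer_maps)
    rows, cols = len(wafer_maps[0]), len(wafer_maps[0][0])
    return [
        [sum(w[r][c] for w in wafer_maps) / n for c in range(cols)]
        for r in range(rows)
    ]


def hot_spots(rate_map, threshold):
    """Die positions (row, col) whose stacked fail rate meets the threshold."""
    return [
        (r, c)
        for r, row in enumerate(rate_map)
        for c, rate in enumerate(row)
        if rate >= threshold
    ]


# Four hypothetical 3x3 wafer maps; 1 marks a failed die
wafers = [
    [[0, 0, 1], [0, 0, 1], [0, 0, 0]],
    [[0, 0, 1], [0, 1, 0], [0, 0, 0]],
    [[1, 0, 1], [0, 0, 1], [0, 0, 0]],
    [[0, 0, 1], [0, 0, 0], [0, 0, 0]],
]

rates = stack_fail_rate(wafers)
print(hot_spots(rates, threshold=0.5))  # [(0, 2), (1, 2)]
```

Isolated failures at (0, 0) and (1, 1) appear on only one wafer each and drop out, while the positions that fail repeatedly stand out once the maps are stacked.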

A wafer is cut into thousands of chips, and a chip includes a bank with hundreds of millions of cells. In the past, legacy systems could perform only wafer-level analysis because of the data processing capacity and rate required, but with the introduction of Metatron you can now implement a micro analytical system at the chip level. With this system, you can check test results in the smallest cell units, represented as tens of millions of dots per wafer, to see the quality information of the wafer in more detail. The defect patterns found through micro analysis can be linked with the FDC system to identify problems in the process and opportunities for improvement.

Metatron supports aggregation, storage, processing and analysis of large volumes of data for micro-analysis and micro-quality analysis of semiconductors, and presents the results with SVG (Scalable Vector Graphics), which can be zoomed in and out by tens of times.

Predictive Maintenance System

Equipment maintenance in the semiconductor process can be divided into PM (Periodic Maintenance) and BM (Breakdown Maintenance). Unlike planned PM, BM responds to breakdowns that occur abnormally and unpredictably, so it significantly affects production schedules and yields. If the process is parallel, the failure of one piece of equipment may not affect other processes. For semiconductors, however, most of the process runs in series, so the failure of one piece of equipment can lead to the failure of the whole process.
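The difference between series and parallel arrangements can be illustrated with simple availability arithmetic. The sketch below assumes that equipment failures are independent and uses made-up availability figures; the function names are hypothetical.

```python
import math


def series_availability(availabilities):
    """A series line runs only if every step is up (independence assumed)."""
    return math.prod(availabilities)


def parallel_availability(availabilities):
    """A parallel group is down only if every member is down (independence assumed)."""
    return 1.0 - math.prod(1.0 - a for a in availabilities)


# Hypothetical: 100 sequential steps, each available 99% of the time
print(round(series_availability([0.99] * 100), 3))   # 0.366

# Hypothetical: two redundant machines, each available 90% of the time
print(round(parallel_availability([0.9, 0.9]), 3))   # 0.99
```

Under these assumptions, even highly reliable individual machines yield a fragile line when chained in series, which is why unplanned BM is so costly in this setting.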

In a PdM (Predictive Maintenance) system, remaining life is predicted by applying AFT, RF, time-series analysis and similar techniques through an ensemble method based on FDC data, measured data, yield data, maintenance history and so on. Machine learning and deep neural networks single out the major root causes from a large number of factors, so the system can warn of otherwise unpredictable equipment failures and infer their root causes, allowing site managers to make quick judgments and take appropriate action.

To act before BM occurs, the most important question is how quickly data can be analyzed and alerts raised in real time. Metatron can predict time-series data faster than any other solution.
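As a rough illustration of the ensemble step only, the sketch below combines remaining-life estimates from several models with a weighted average and checks the result against a maintenance lead time. The source does not say how Metatron combines its models; the weighting scheme, model names, weights, hours and function names here are all hypothetical.

```python
def ensemble_remaining_life(estimates, weights):
    """Weighted average of per-model remaining-life estimates, in hours."""
    total_weight = sum(weights[name] for name in estimates)
    weighted_sum = sum(estimates[name] * weights[name] for name in estimates)
    return weighted_sum / total_weight


def needs_alert(remaining_hours, lead_time_hours):
    """Alert when predicted remaining life is within the maintenance lead time."""
    return remaining_hours <= lead_time_hours


# Hypothetical per-model predictions for one piece of equipment (hours)
estimates = {"aft": 120.0, "rf": 90.0, "time_series": 60.0}
# Hypothetical weights, e.g. derived from each model's past accuracy
weights = {"aft": 1.0, "rf": 2.0, "time_series": 1.0}

combined = ensemble_remaining_life(estimates, weights)
print(combined)                                    # 90.0
print(needs_alert(combined, lead_time_hours=96))   # True
```

Averaging dampens the error of any single model, and the lead-time check turns the prediction into an action that can be scheduled as planned maintenance rather than handled as a breakdown.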